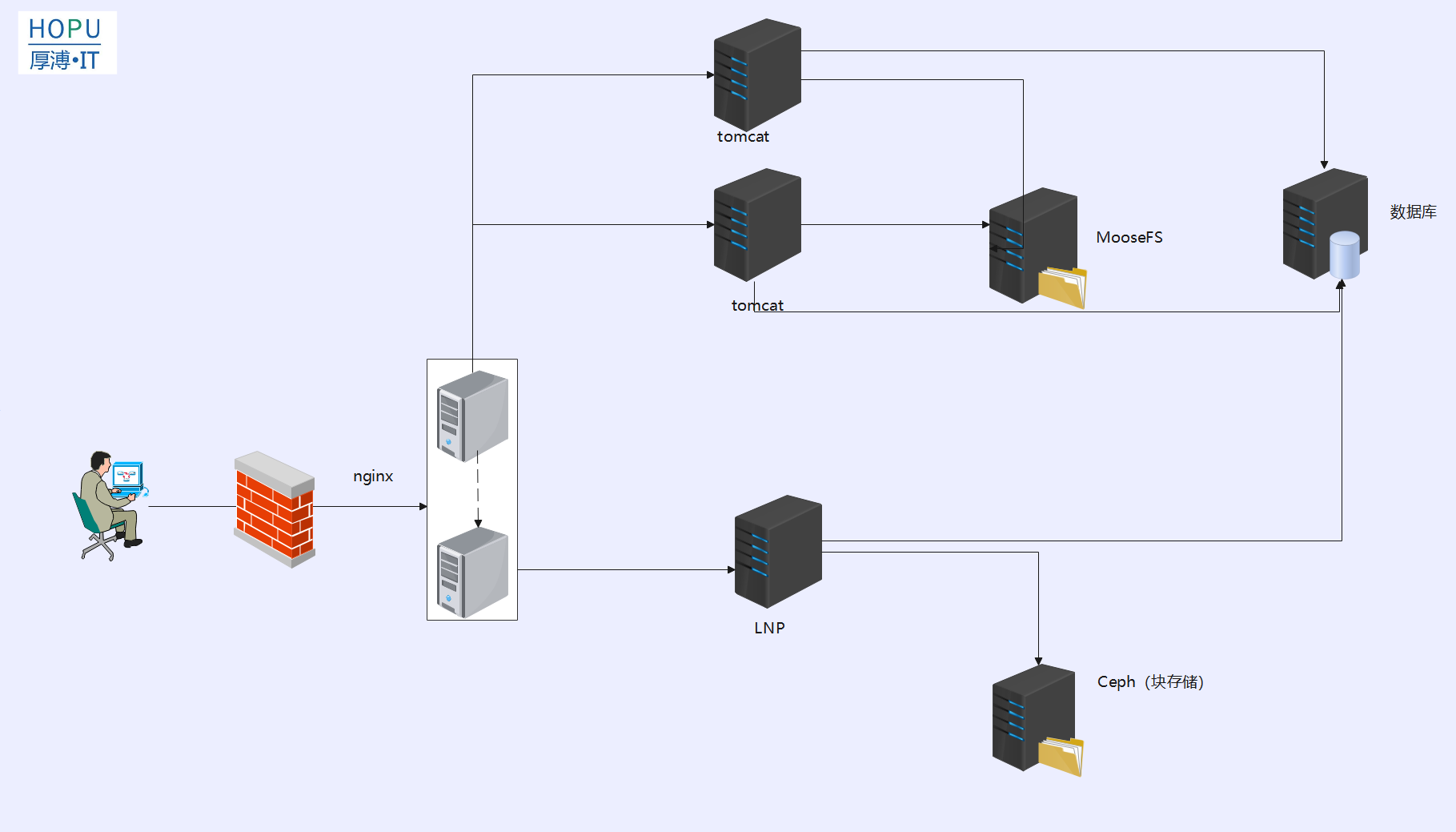

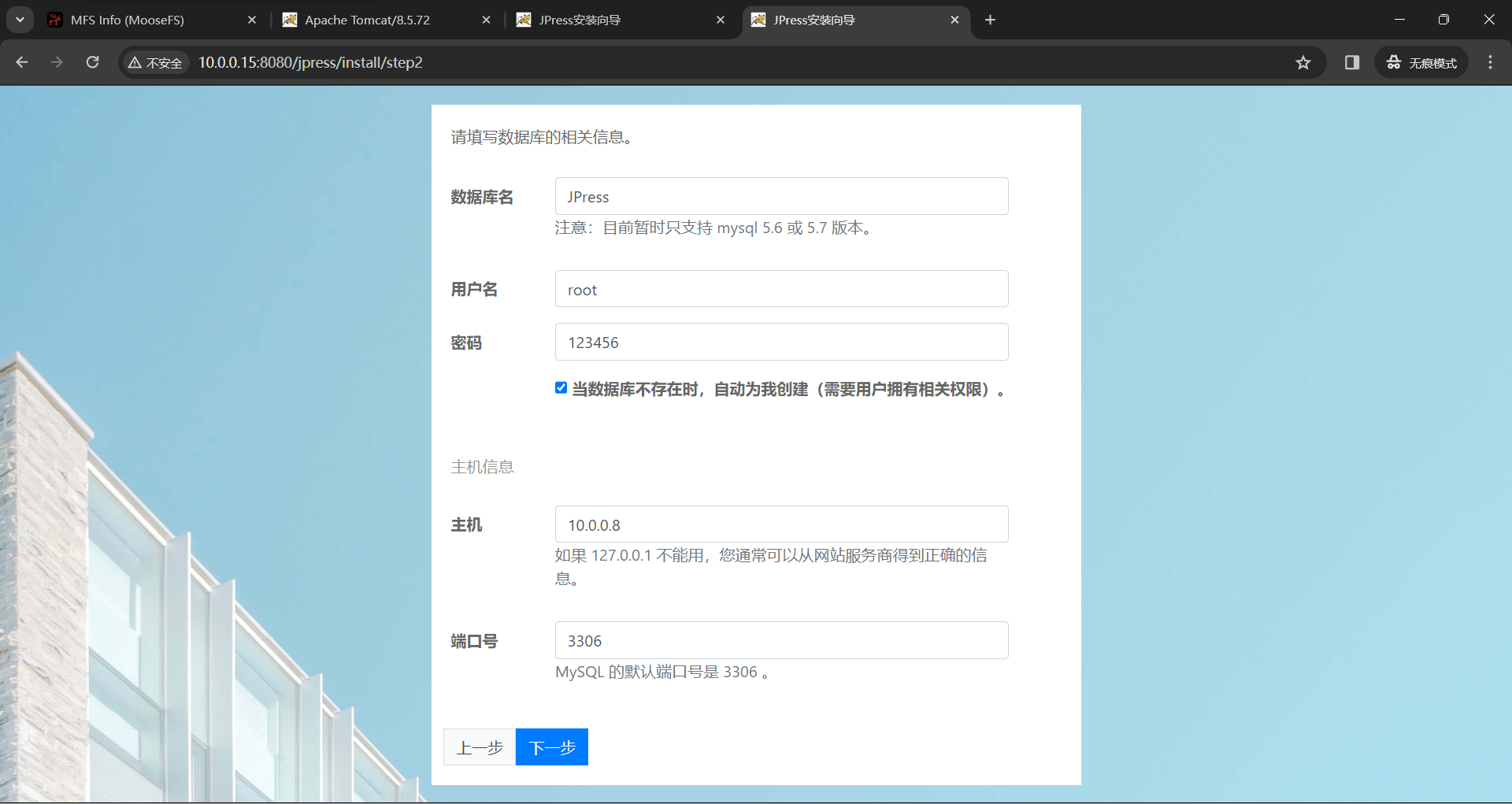

MFS and Ceph Integrated Hands-On Lab

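1. User traffic to the backend Nginx servers must pass through the firewall, which forwards and filters it; only traffic on ports 80, 443, 53, and 22 is allowed.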

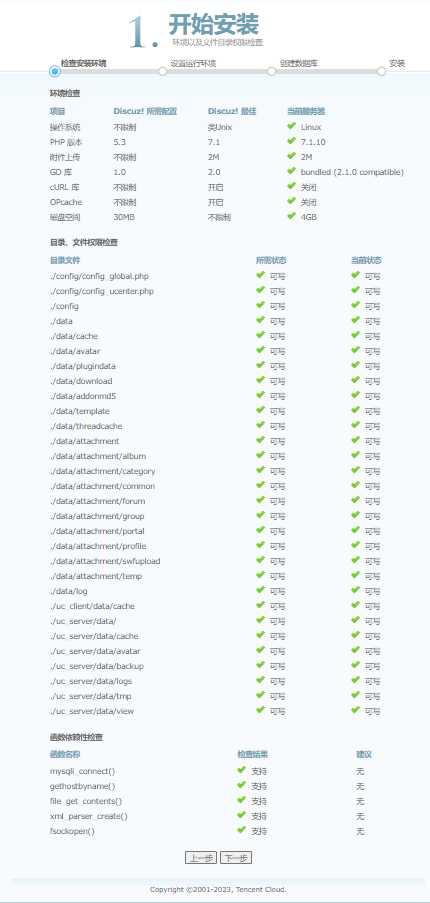

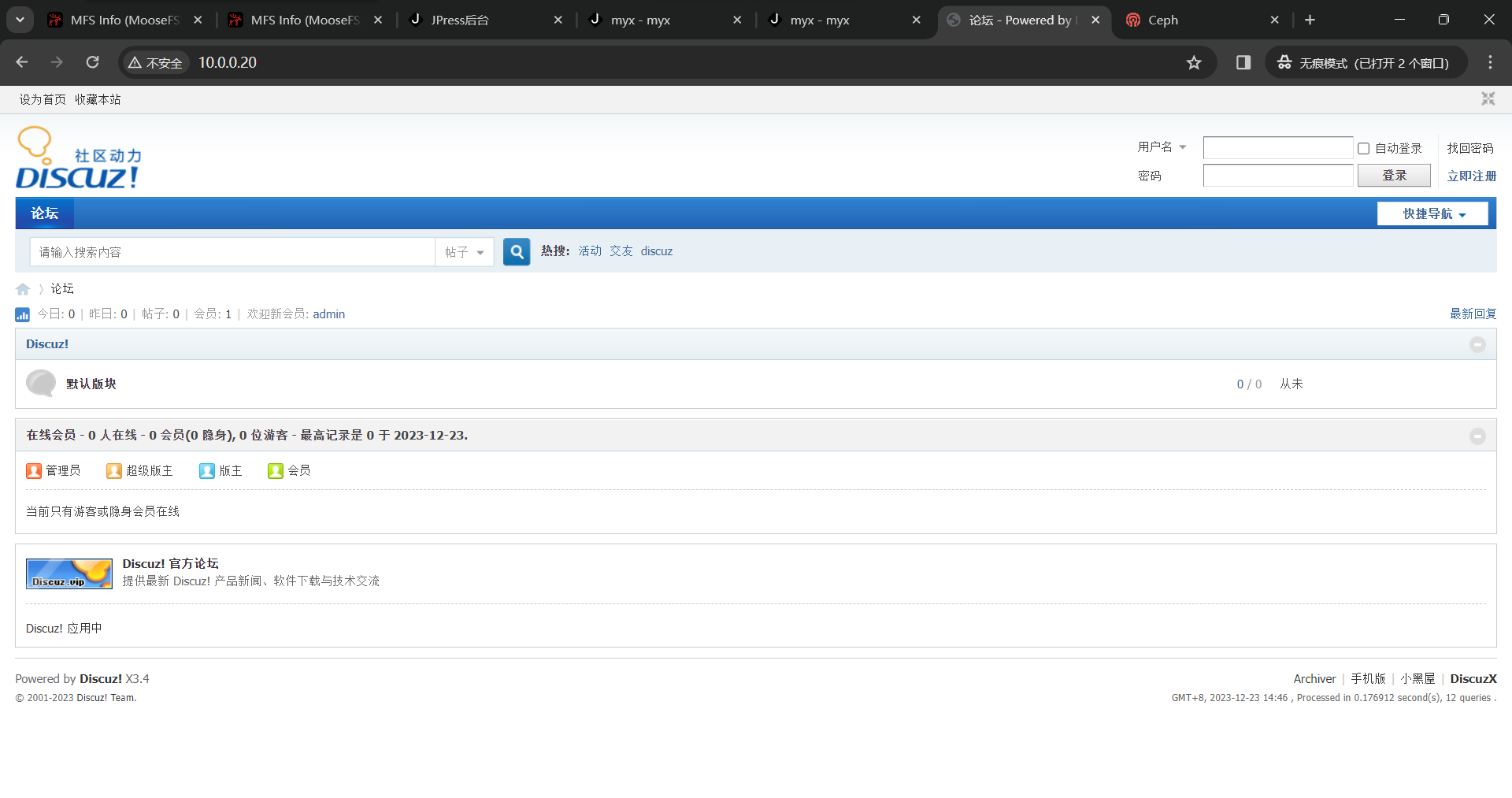

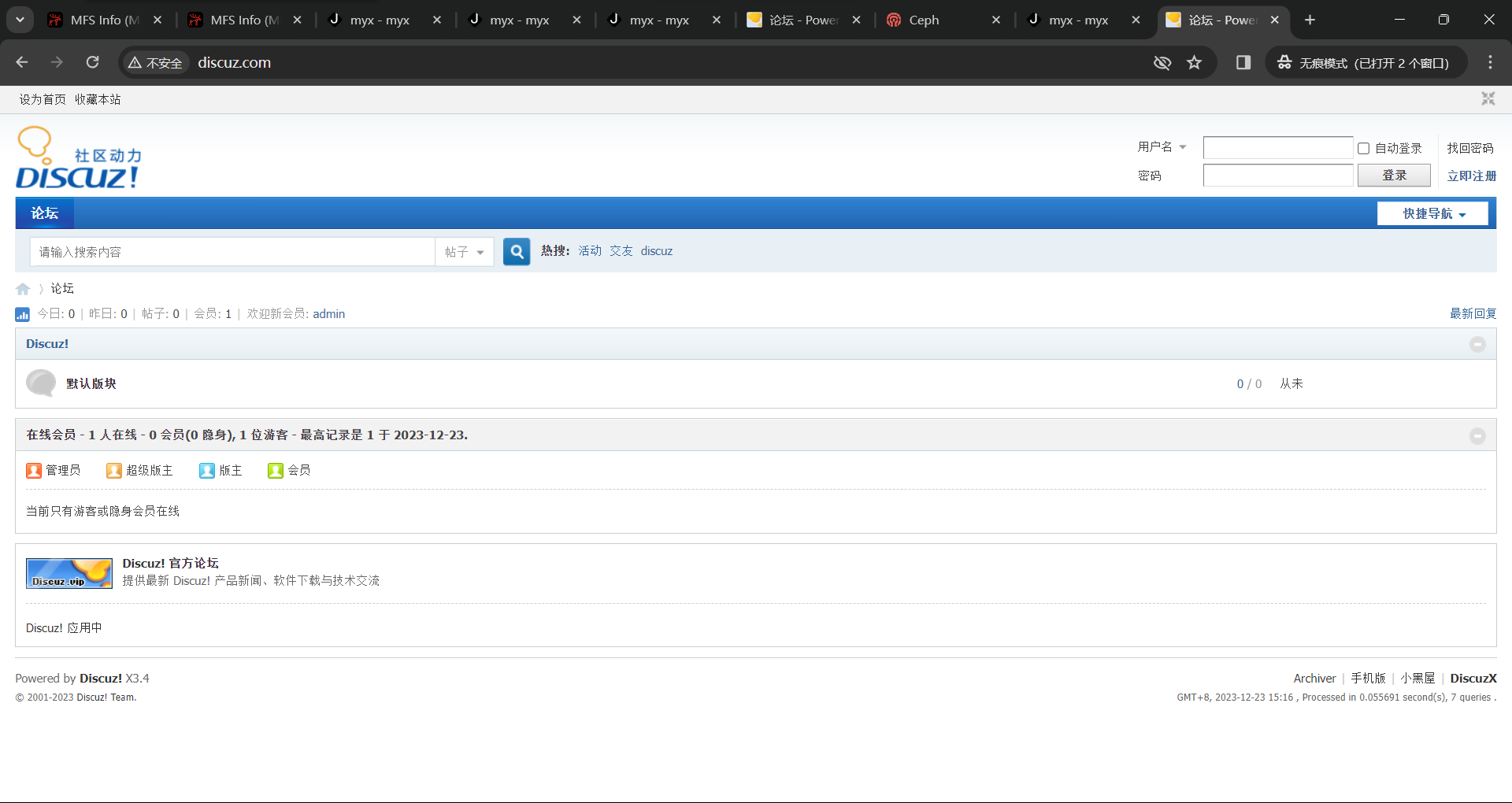

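2. Nginx acts as a reverse proxy for the backend servers, which come in two environments: Java and PHP. In the Java environment, Tomcat runs the JPress application, reachable by domain name. In the LNMP environment, a Discuz! forum is deployed. Both environments share one database server without affecting each other.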

3. The Tomcat backend uses MFS (MooseFS) as its distributed storage system.

4. The LNMP environment uses Ceph as its distributed storage system.

IP address plan:

| IP Address | Hostname | Role |
|---|---|---|
| 10.0.0.8 | Mysql | Database (JPress, ultrax) |
| 10.0.0.9 | Iptables | Firewall (80, 443, 53, 22) |
| 10.0.0.10 | mfs-master | Metadata server (master) |
| 10.0.0.11 | mfs-logger | Metadata log server (metalogger) |
| 10.0.0.12 | Chunkservers-1 | Data storage node 1 |
| 10.0.0.13 | Chunkservers-2 | Data storage node 2 |
| 10.0.0.14 | tomcat-1 | Tomcat server 1 |
| 10.0.0.15 | tomcat-2 | Tomcat server 2 |
| 10.0.0.16 | Ceph-admin | Admin node |
| 10.0.0.17, 10.0.10.17 | node-01 | mon, mgr, osd (/dev/sdb) |
| 10.0.0.18, 10.0.10.18 | node-02 | mon, mgr, osd (/dev/sdb) |
| 10.0.0.19, 10.0.10.19 | node-03 | mon, mgr, osd (/dev/sdb) |
| 10.0.0.20 | LNP | Discuz! forum |
| 10.0.0.21 | Nginx-1 | http://www.jpress.com/ |
| 10.0.0.22 | Nginx-2 | http://www.discuz.com/ |

Mysql

# Installed by compiling from source

mysql> ALTER USER USER() IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.02 sec)

mysql> grant all privileges on *.* to 'root'@'%' identified by '123456';

Query OK, 0 rows affected, 1 warning (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.02 sec)

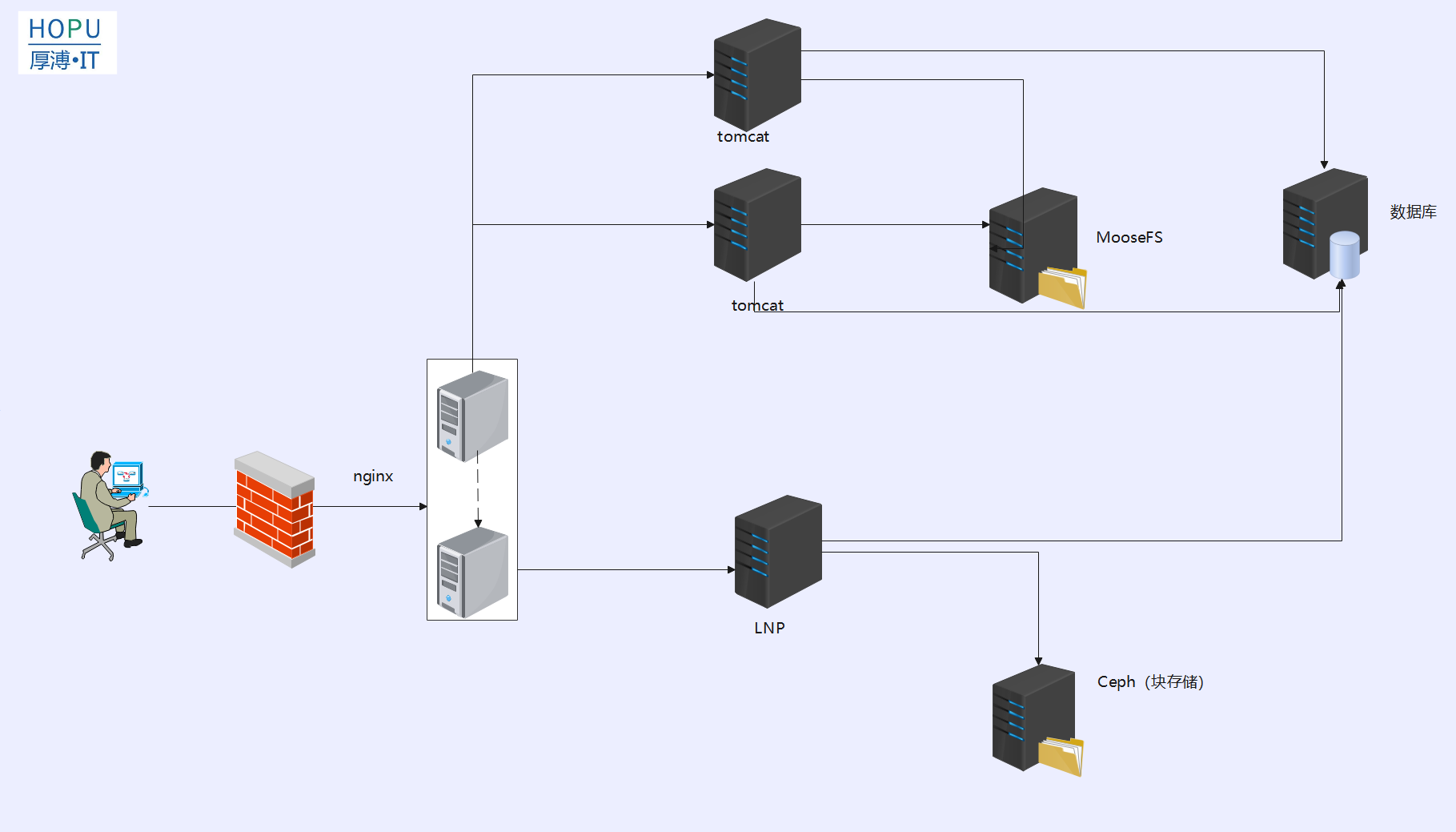
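The requirement that JPress and Discuz share one MySQL server without affecting each other is commonly met by giving each application its own database and an account limited to that database. The helper below is a hypothetical sketch assuming MySQL 5.7+ syntax; the database names `jpress` and `ultrax` come from the IP plan, while the user names, passwords, and the `10.0.0.%` host pattern are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print SQL that creates one application database and a
# user whose privileges are limited to that database (MySQL 5.7+ syntax assumed).
# Arguments: database, user, password, optional client host pattern.
app_db_sql() {
  local db="$1" user="$2" pass="$3" host="${4:-10.0.0.%}"
  printf 'CREATE DATABASE IF NOT EXISTS `%s` DEFAULT CHARACTER SET utf8mb4;\n' "$db"
  printf "CREATE USER IF NOT EXISTS '%s'@'%s' IDENTIFIED BY '%s';\n" "$user" "$host" "$pass"
  printf "GRANT ALL PRIVILEGES ON \`%s\`.* TO '%s'@'%s';\n" "$db" "$user" "$host"
}

# Example with placeholder credentials, run on the database host:
#   { app_db_sql jpress jpress_user 'ChangeMe1!'
#     app_db_sql ultrax discuz_user 'ChangeMe2!'
#     echo 'FLUSH PRIVILEGES;'; } | mysql -uroot -p
```

Because each account can only reach its own database, a misconfigured JPress cannot touch the Discuz tables, and vice versa. Passwords containing a single quote would break the generated SQL, so keep them free of `'`.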

MFS

# Import the official yum repository and GPG key on all nodes

curl "http://repository.moosefs.com/MooseFS-3-el7.repo" > /etc/yum.repos.d/MooseFS.repo

curl "https://repository.moosefs.com/RPM-GPG-KEY-MooseFS" > /etc/pki/rpm-gpg/RPM-GPG-KEY-MooseFS

===========================Master===========================

[root@mfs-master~]$ yum install moosefs-master moosefs-cgi moosefs-cgiserv moosefs-cli -y

# Start the service

[root@mfs-master~]$ systemctl enable --now moosefs-master

# GUI

[root@mfs-master~]$ systemctl enable --now moosefs-cgiserv

# Access in a browser

http://10.0.0.10:9425

=========================Metalogger=========================

[root@mfs-logger~]$ yum install moosefs-metalogger -y

[root@mfs-logger~]$ vim /etc/mfs/mfsmetalogger.cfg

MASTER_HOST = 10.0.0.10 # IP address of the Master server

[root@mfs-logger~]$ systemctl enable --now moosefs-metalogger

# Verify that the metadata has been synced from the master

[root@mfs-logger/var/lib/mfs]$ ll

total 8

-rw-r-----. 1 mfs mfs 45 Dec 22 15:33 changelog_ml_back.0.mfs

-rw-r-----. 1 mfs mfs 0 Dec 22 15:33 changelog_ml_back.1.mfs

-rw-r-----. 1 mfs mfs 8 Dec 22 15:33 metadata_ml.tmp

==========================Chunkservers=========================

[root@chunkservers-1~]$ yum install moosefs-chunkserver -y

# Create the mount point

[root@chunkservers-1~]$ mkdir -p /mnt/mfschunks1

# Attach a new 20 GB disk to each chunkserver (two 20 GB disks in total)

# Rescan the SCSI hosts so the hot-added disk is detected

[root@chunkservers-1~]$ alias scandisk='echo - - - > /sys/class/scsi_host/host0/scan;echo - - - > /sys/class/scsi_host/host1/scan;echo - - - > /sys/class/scsi_host/host2/scan'

[root@chunkservers-1~]$ scandisk

[root@chunkservers-1~]$ lsblk | grep sdb

sdb 8:16 0 20G 0 disk
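The `scandisk` alias hard-codes `host0` to `host2`, but a VM may expose a different number of SCSI hosts. A minimal sketch (function name hypothetical) that loops over whatever `host*` entries exist instead; the optional directory argument exists only so the loop can be tried against a scratch directory, while the real target is `/sys/class/scsi_host` and requires root:

```shell
#!/usr/bin/env bash
# Rescan every SCSI host found under the given directory.
# Default base is /sys/class/scsi_host (writing there needs root).
rescan_scsi_hosts() {
  local base="${1:-/sys/class/scsi_host}" scan
  for scan in "$base"/host*/scan; do
    [ -e "$scan" ] || continue   # glob matched nothing
    echo '- - -' > "$scan"       # wildcards for channel, target, and LUN
  done
}
```

On the chunkserver, `rescan_scsi_hosts` with no argument does the same job as the alias.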

# Partition

[root@chunkservers-1~]$ fdisk /dev/sdb

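# Keystrokes in fdisk: n (new), p (primary), Enter three times to accept the defaults, w (write and exit)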

[root@chunkservers-1~]$ lsblk | grep sdb1

└─sdb1 8:17 0 20G 0 part

# Format

[root@chunkservers-1~]$ mkfs.xfs /dev/sdb1

meta-data=/dev/sdb1 isize=512 agcount=4, agsize=1310656 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242624, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# Mount

[root@chunkservers-1~]$ mount /dev/sdb1 /mnt/mfschunks1/
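The `mount` above does not survive a reboot. A sketch of an idempotent helper (hypothetical name `add_fstab_entry`) that records the mount in `/etc/fstab` only once; passing the `UUID=...` value printed by `blkid /dev/sdb1` instead of the device name is a common, more robust choice:

```shell
#!/usr/bin/env bash
# Append "<dev> <mnt> <fstype> defaults 0 0" to an fstab file unless the
# mount point is already listed. Arguments: device, mount point, fs type,
# optional fstab path (defaults to /etc/fstab).
add_fstab_entry() {
  local dev="$1" mnt="$2" fstype="$3" fstab="${4:-/etc/fstab}"
  # awk exits 0 only if some line's second field equals the mount point
  if awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' "$fstab" 2>/dev/null; then
    return 0
  fi
  printf '%s %s %s defaults 0 0\n' "$dev" "$mnt" "$fstype" >> "$fstab"
}
```

For this chunkserver that would be `add_fstab_entry /dev/sdb1 /mnt/mfschunks1 xfs`; running `mount -a` afterwards is a quick way to check the new line.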

# Give ownership to the mfs user

[root@chunkservers-1~]$ chown -R mfs:mfs /mnt/mfschunks1/

[root@chunkservers-1~]$ vim /etc/mfs/mfschunkserver.cfg

MASTER_HOST = 10.0.0.10

[root@chunkservers-1~]$ vim /etc/mfs/mfshdd.cfg

/mnt/mfschunks1

# Start the service

[root@chunkservers-1~]$ systemctl enable --now moosefs-chunkserver

Created symlink from /etc/systemd/system/multi-user.target.wants/moosefs-chunkserver.service to /usr/lib/systemd/system/moosefs-chunkserver.service.

### Repeat the same steps on chunkservers-2 ###

Tomcat

# Import the official yum repository and GPG key

[root@tomcat-1~]$ curl "http://repository.moosefs.com/MooseFS-3-el7.repo" > /etc/yum.repos.d/MooseFS.repo

[root@tomcat-1~]$ curl "https://repository.moosefs.com/RPM-GPG-KEY-MooseFS" > /etc/pki/rpm-gpg/RPM-GPG-KEY-MooseFS

[root@tomcat-1~]$ yum install -y moosefs-client fuse

# Create the mount point

[root@tomcat-1~]$ mkdir -p /mnt/mfs

[root@tomcat-1~]$ vim /etc/mfs/mfsmount.cfg

/mnt/mfs # the mount point

# Mount

[root@tomcat-1~]$ mfsmount -H 10.0.0.10

# Set the goal (number of copies) to 2

[root@tomcat-1~]$ mfssetgoal -r 2 /mnt/mfs

# Check the goal

[root@tomcat-1~]$ mfsgetgoal /mnt/mfs

/mnt/mfs: 2

# Check the mount

[root@tomcat-1~]$ df -h | grep /mnt/mfs

10.0.0.10:9421 40G 577M 40G 2% /mnt/mfs
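`df` reports the raw space of both chunkservers (two 20 GB disks). With the goal set to 2, every chunk is stored on two chunkservers, so the space actually available for files is roughly half of that figure, ignoring any space the chunkservers hold in reserve:

$$
C_{\text{usable}} \approx \frac{C_{\text{raw}}}{\text{goal}} = \frac{2 \times 20\,\text{GB}}{2} = 20\,\text{GB}
$$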

# Configure the JDK environment

[root@tomcat-1/mnt/mfs]$ tar xf jdk-8u341-linux-x64.tar.gz -C /usr/local/src/

[root@tomcat-1~]$ cat > /etc/profile.d/jdk.sh <<'EOF'

JAVA_HOME=/usr/local/src/jdk1.8.0_341

JAVA_BIN=$JAVA_HOME/bin

JRE_HOME=$JAVA_HOME/jre

JRE_BIN=$JRE_HOME/bin

PATH=$JAVA_BIN:$JRE_BIN:$PATH

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export JAVA_HOME JRE_HOME PATH CLASSPATH

EOF
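Why the quoting of the heredoc delimiter matters here: with an unquoted `EOF`, the shell expands `$JAVA_HOME` and the other variables while writing the file, and since they are not yet set in the current shell, the file ends up with lines such as `JAVA_BIN=/bin`. A quoted `'EOF'` writes the text literally so the variables are expanded later, when the profile is sourced. A small self-contained demonstration (the function name is illustrative):

```shell
#!/usr/bin/env bash
# Compare an unquoted and a quoted heredoc delimiter.
demo_heredoc() {
  local JAVA_HOME=""   # simulate a shell where JAVA_HOME is not defined yet
  cat <<EOF
unquoted: JAVA_BIN=$JAVA_HOME/bin
EOF
  cat <<'EOF'
quoted:   JAVA_BIN=$JAVA_HOME/bin
EOF
}

demo_heredoc
# unquoted: JAVA_BIN=/bin
# quoted:   JAVA_BIN=$JAVA_HOME/bin
```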

[root@tomcat-1~]$ source /etc/profile.d/jdk.sh

[root@tomcat-1~]$ java -version

java version "1.8.0_341"

Java(TM) SE Runtime Environment (build 1.8.0_341-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.341-b10, mixed mode)

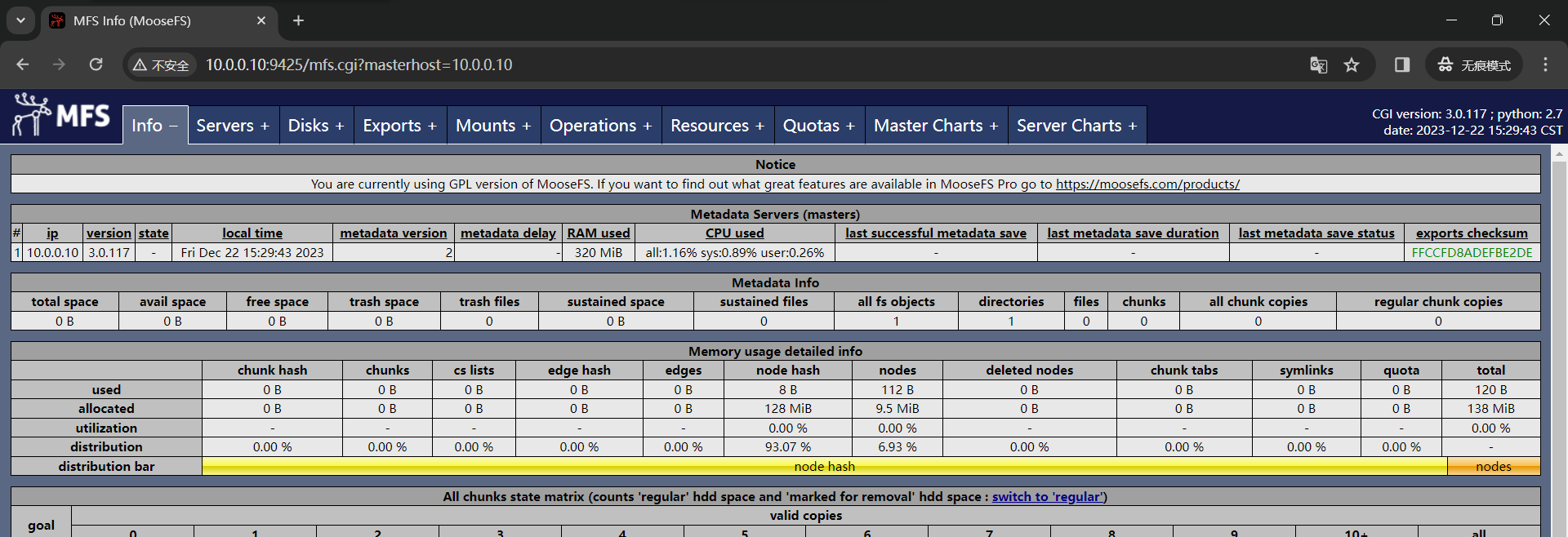

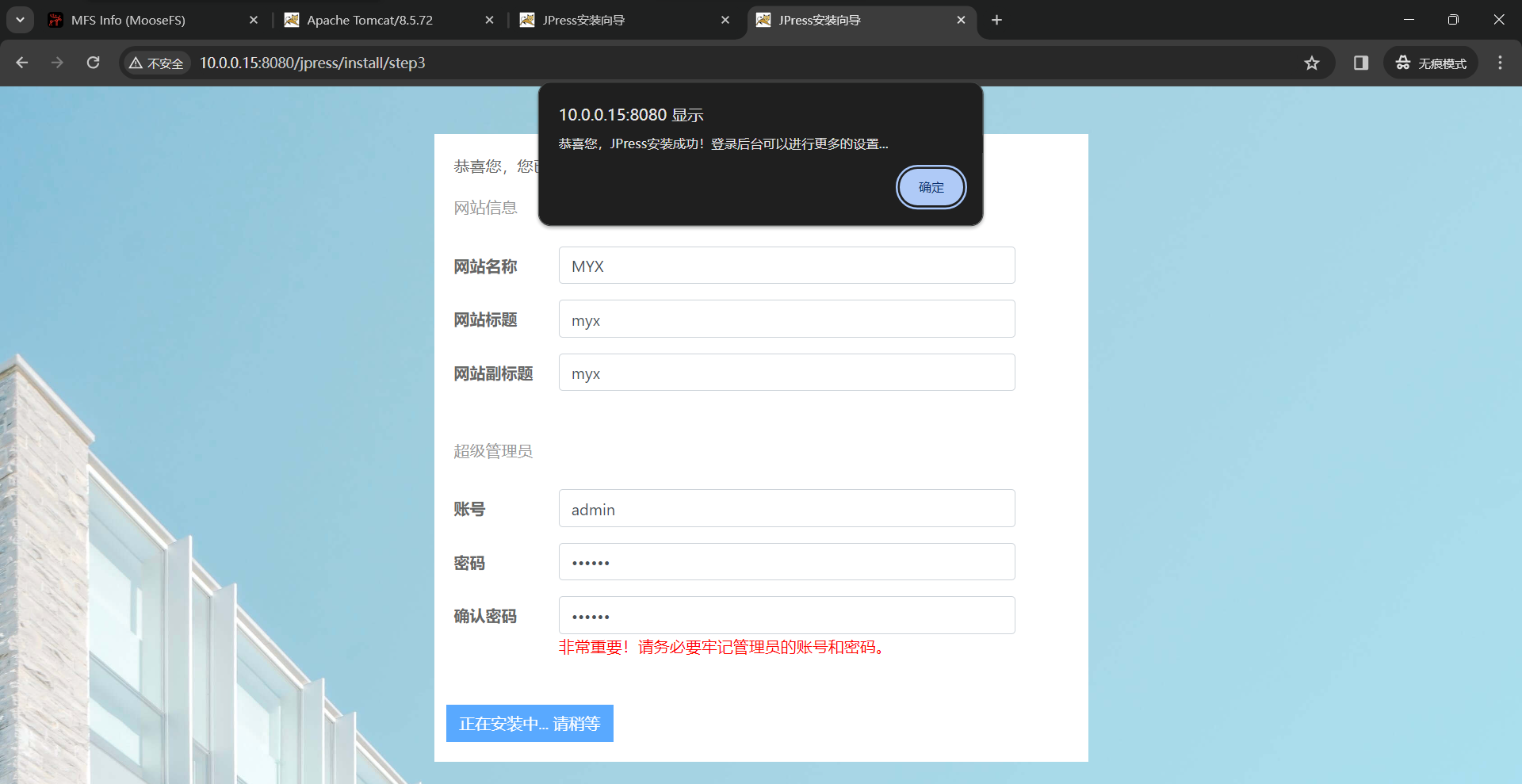

# Deploy Tomcat

[root@tomcat-1/mnt/mfs]$ tar xf apache-tomcat-8.5.72.tar.gz -C /usr/local/

# Deploy JPress

[root@tomcat-1/mnt/mfs/jpress]$ jar xf jpress-v4.2.0.war

[root@tomcat-1/mnt/mfs/jpress]$ ls

jpress-v4.2.0.war META-INF robots.txt static templates WEB-INF

[root@tomcat-1/mnt/mfs/jpress]$ ln -s /mnt/mfs/jpress/ /usr/local/apache-tomcat-8.5.72/webapps/

# Start Tomcat

[root@tomcat-2~]$ /usr/local/apache-tomcat-8.5.72/bin/startup.sh

Using CATALINA_BASE: /usr/local/apache-tomcat-8.5.72

Using CATALINA_HOME: /usr/local/apache-tomcat-8.5.72

Using CATALINA_TMPDIR: /usr/local/apache-tomcat-8.5.72/temp

Using JRE_HOME: /usr/local/src/jdk1.8.0_341/jre

Using CLASSPATH: /usr/local/apache-tomcat-8.5.72/bin/bootstrap.jar:/usr/local/apache-tomcat-8.5.72/bin/tomcat-juli.jar

Using CATALINA_OPTS:

Tomcat started.

# Repeat the same steps on tomcat-2

Ceph

# On every node, add hosts entries mapping hostnames to IP addresses

cat >> /etc/hosts << EOF

10.0.0.16 admin

10.0.0.17 node01

10.0.0.18 node02

10.0.0.19 node03

10.0.0.20 client

EOF

# Install dependency packages on all nodes

yum -y install epel-release

yum -y install yum-plugin-priorities yum-utils ntpdate python-setuptools python-pip gcc gcc-c++ autoconf libjpeg libjpeg-devel libpng libpng-devel freetype freetype-devel libxml2 libxml2-devel zlib zlib-devel glibc glibc-devel glib2 glib2-devel bzip2 bzip2-devel zip unzip ncurses ncurses-devel curl curl-devel e2fsprogs e2fsprogs-devel krb5-devel libidn libidn-devel openssl openssh openssl-devel nss_ldap openldap openldap-devel openldap-clients openldap-servers libxslt-devel libevent-devel ntp libtool-ltdl bison libtool vim-enhanced python wget lsof iptraf strace lrzsz kernel-devel kernel-headers pam-devel tcl tk cmake ncurses-devel bison setuptool popt-devel net-snmp screen perl-devel pcre-devel net-snmp screen tcpdump rsync sysstat man iptables sudo libconfig git bind-utils tmux elinks numactl iftop bwm-ng net-tools expect snappy leveldb gdisk python-argparse gperftools-libs conntrack ipset jq libseccomp socat chrony sshpass

# Set up passwordless SSH from admin to every node

[root@ceph-admin~]$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

[root@ceph-admin~]$ sshpass -p '123456' ssh-copy-id -o StrictHostKeyChecking=no root@admin

[root@ceph-admin~]$ sshpass -p '123456' ssh-copy-id -o StrictHostKeyChecking=no root@node01

[root@ceph-admin~]$ sshpass -p '123456' ssh-copy-id -o StrictHostKeyChecking=no root@node02

[root@ceph-admin~]$ sshpass -p '123456' ssh-copy-id -o StrictHostKeyChecking=no root@node03

# Synchronize time across all nodes

systemctl restart chronyd

systemctl enable --now chronyd

systemctl status chronyd

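timedatectl set-ntp true # enable NTP synchronization

timedatectl set-timezone Asia/Shanghai # set the time zone

chronyc -a makestep # step the system clock immediately

timedatectl status # check the time synchronization status

chronyc -v sources # show the NTP source servers

timedatectl set-local-rtc 0 # keep the hardware clock (RTC) in UTC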

# Restart rsyslog and crond so they pick up the new time zone

systemctl restart rsyslog

systemctl restart crond

# Configure the Ceph yum repository on all nodes

wget https://download.ceph.com/rpm-nautilus/el7/noarch/ceph-release-1-1.el7.noarch.rpm --no-check-certificate

rpm -ivh ceph-release-1-1.el7.noarch.rpm --force

sed -i 's#download.ceph.com#mirrors.tuna.tsinghua.edu.cn/ceph#' /etc/yum.repos.d/ceph.repo

# Shut down all hosts (a NIC and a disk are added to the Ceph nodes next), then start them again

sync

poweroff

Ceph-node

# Add one NIC and one 20 GB disk to each node

[root@node-01~]$ hostname -I

10.0.0.17 10.0.10.17

[root@node-02~]$ hostname -I

10.0.0.18 10.0.10.18

[root@node-03~]$ hostname -I

10.0.0.19 10.0.10.19

# The added disk

[root@node-01~]$ ll /dev/sdb

brw-rw----. 1 root disk 8, 16 Dec 22 19:32 /dev/sdb

[root@node-02~]$ ll /dev/sdb

brw-rw----. 1 root disk 8, 16 Dec 22 19:32 /dev/sdb

[root@node-03~]$ ll /dev/sdb

brw-rw----. 1 root disk 8, 16 Dec 22 19:32 /dev/sdb

# Create the working directory on all nodes

mkdir -p /etc/ceph

# Install the ceph-deploy tool on the admin node

[root@ceph-admin~]$ cd /etc/ceph

[root@ceph-admin/etc/ceph]$ yum install -y ceph-deploy

# If the version number is printed, the installation succeeded

[root@ceph-admin/etc/ceph]$ ceph-deploy --version

2.0.1

# From the admin node, install the Ceph packages on the other nodes

[root@ceph-admin/etc/ceph]$ ceph-deploy install --release nautilus node0{1..3} admin

# Tell ceph-deploy which nodes are mon (monitor) nodes

[root@ceph-admin/etc/ceph]$ ceph-deploy new --public-network 10.0.0.0/24 --cluster-network 10.0.10.0/24 node01 node02 node03

# Initialize the mon nodes from the admin node

[root@ceph-admin/etc/ceph]$ ceph-deploy mon create node01 node02 node03

[root@ceph-admin/etc/ceph]$ ceph-deploy --overwrite-conf mon create-initial

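# Check the Ceph processes. This output was captured on admin, which runs only ceph-crash; on node01, node02, and node03 a ceph-mon process should also be listed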

[root@admin/etc/ceph]$ ps aux | grep ceph

root 23292 0.0 0.9 189260 9204 ? Ss 11:33 0:00 /usr/bin/python2.7 /usr/bin/ceph-crash

root 24199 0.0 0.0 112824 980 pts/0 S+ 11:40 0:00 grep --color=auto ceph

# On the admin node, check the Ceph cluster status

[root@admin/etc/ceph]$ ceph -s

cluster:

id: 397e9605-7edb-4bd4-b1df-f8adbc4ffddc

health: HEALTH_WARN

mons are allowing insecure global_id reclaim

services:

mon: 3 daemons, quorum node01,node02,node03 (age 15m)

mgr: no daemons active

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# On the admin node, check the mon quorum election result

[root@admin/etc/ceph]$ ceph quorum_status --format json-pretty | grep leader

"quorum_leader_name": "node01",

# On the admin node, add the OSDs

ceph-deploy --overwrite-conf osd create node01 --data /dev/sdb

ceph-deploy --overwrite-conf osd create node02 --data /dev/sdb

ceph-deploy --overwrite-conf osd create node03 --data /dev/sdb

# On the admin node, check the Ceph cluster status

[root@admin/etc/ceph]$ ceph -s

cluster:

id: 397e9605-7edb-4bd4-b1df-f8adbc4ffddc

health: HEALTH_WARN

no active mgr

mons are allowing insecure global_id reclaim

services:

mon: 3 daemons, quorum node01,node02,node03 (age 18m)

mgr: no daemons active

osd: 3 osds: 3 up (since 11s), 3 in (since 11s)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

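# Push ceph.conf to the Ceph nodes (ceph-deploy admin node01 node02 node03 additionally copies the client.admin keyring, which is what lets a node run ceph commands against the cluster)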

[root@admin/etc/ceph]$ ceph-deploy --overwrite-conf config push node01 node02 node03

# On the admin node, view detailed OSD information

[root@admin/etc/ceph]$ ceph osd stat

3 osds: 3 up (since 46s), 3 in (since 46s); epoch: e13

[root@admin/etc/ceph]$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.05846 root default

-3 0.01949 host node01

0 hdd 0.01949 osd.0 up 1.00000 1.00000

-5 0.01949 host node02

1 hdd 0.01949 osd.1 up 1.00000 1.00000

-7 0.01949 host node03

2 hdd 0.01949 osd.2 up 1.00000 1.00000

[root@admin/etc/ceph]$ rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

total_objects 0

total_used 0 B

total_avail 0 B

total_space 0 B

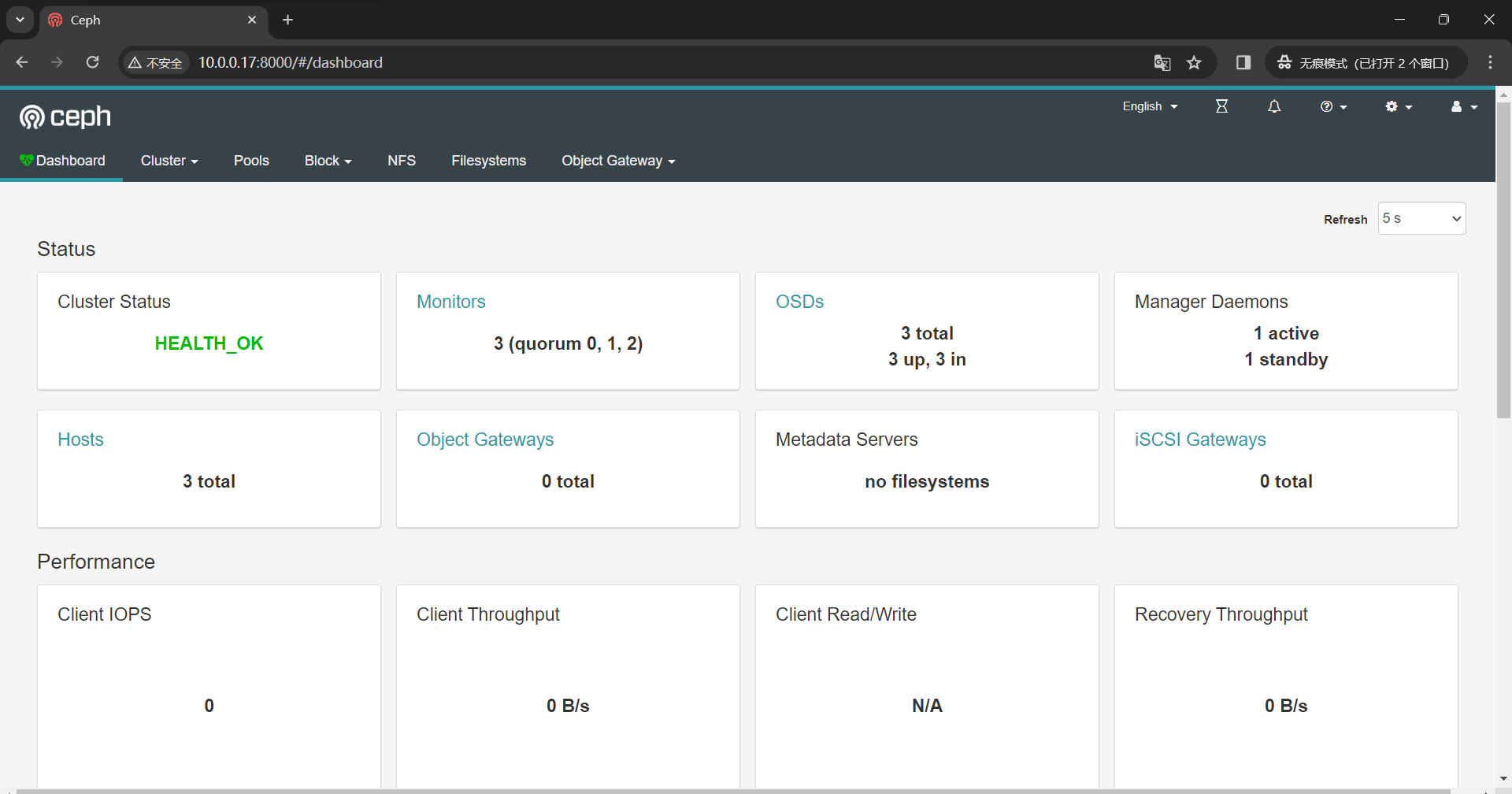

# Deploy the mgr daemons

[root@admin/etc/ceph]$ ceph-deploy mgr create node01 node02

# Disable insecure global_id reclaim (clears the HEALTH_WARN shown above)

[root@admin/etc/ceph]$ ceph config set mon auth_allow_insecure_global_id_reclaim false

[root@admin/etc/ceph]$ ceph -s

cluster:

id: 397e9605-7edb-4bd4-b1df-f8adbc4ffddc

health: HEALTH_OK # HEALTH_OK means the setting took effect

services:

mon: 3 daemons, quorum node01,node02,node03 (age 20m)

mgr: node01(active, since 63s), standbys: node02

osd: 3 osds: 3 up (since 2m), 3 in (since 2m)

task status:

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs:

# Check the OSD status

[root@admin/etc/ceph]$ ceph osd status

+----+--------+-------+-------+--------+---------+--------+---------+-----------+

| id | host | used | avail | wr ops | wr data | rd ops | rd data | state |

+----+--------+-------+-------+--------+---------+--------+---------+-----------+

| 0 | node01 | 1025M | 18.9G | 0 | 0 | 0 | 0 | exists,up |

| 1 | node02 | 1025M | 18.9G | 0 | 0 | 0 | 0 | exists,up |

| 2 | node03 | 1025M | 18.9G | 0 | 0 | 0 | 0 | exists,up |

+----+--------+-------+-------+--------+---------+--------+---------+-----------+

[root@admin/etc/ceph]$ ceph osd df

ID CLASS WEIGHT REWEIGHT SIZE RAW USE DATA OMAP META AVAIL %USE VAR PGS STATUS

0 hdd 0.01949 1.00000 20 GiB 1.0 GiB 1.8 MiB 0 B 1 GiB 19 GiB 5.01 1.00 0 up

1 hdd 0.01949 1.00000 20 GiB 1.0 GiB 1.8 MiB 0 B 1 GiB 19 GiB 5.01 1.00 0 up

2 hdd 0.01949 1.00000 20 GiB 1.0 GiB 1.8 MiB 0 B 1 GiB 19 GiB 5.01 1.00 0 up

TOTAL 60 GiB 3.0 GiB 5.2 MiB 0 B 3 GiB 57 GiB 5.01

MIN/MAX VAR: 1.00/1.00 STDDEV: 0

# Enable the dashboard module; run the following commands on the active ceph-mgr node

[root@node01/etc/ceph]$ ceph -s | grep mgr

mgr: node01(active, since 7m), standbys: node02

[root@node01/etc/ceph]$ yum reinstall -y ceph-mgr-dashboard

[root@node01/etc/ceph]$ ceph mgr module ls | grep dashboard

"dashboard",

# Enable the dashboard module

[root@node01/etc/ceph]$ ceph mgr module enable dashboard --force

# Disable SSL for the dashboard

[root@node01/etc/ceph]$ ceph config set mgr mgr/dashboard/ssl false

# Set the dashboard listen address and port

[root@node01/etc/ceph]$ ceph config set mgr mgr/dashboard/server_addr 0.0.0.0

[root@node01/etc/ceph]$ ceph config set mgr mgr/dashboard/server_port 8000

# Restart the dashboard

[root@node01/etc/ceph]$ ceph mgr module disable dashboard

[root@node01/etc/ceph]$ ceph mgr module enable dashboard --force

# Confirm the dashboard URL

[root@node01/etc/ceph]$ ceph mgr services

{

"dashboard": "http://node01:8000/"

}

# Set the dashboard username and password

[root@node01/etc/ceph]$ echo "123" > dashboard_passwd.txt

[root@node01/etc/ceph]$ ceph dashboard set-login-credentials admin -i dashboard_passwd.txt

******************************************************************

*** WARNING: this command is deprecated. ***

*** Please use the ac-user-* related commands to manage users. ***

******************************************************************

Username and password updated

# Open http://10.0.0.17:8000 in a browser and log in as admin / 123

# Create a storage pool

[root@node01/etc/ceph]$ ceph osd pool create rbd-demo 64 64
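The two `64`s are `pg_num` and `pgp_num`. A frequently cited rule of thumb from the Ceph documentation targets about 100 PGs per OSD: (number of OSDs × 100) / replica count, rounded up to a power of two. The sketch below computes that figure (function name hypothetical, and it assumes the default replica count of 3 since the pool was created without a size). For a small lab cluster with a single pool, the 64 used here is also reasonable, and the Nautilus `pg_autoscaler` module can be enabled to adjust `pg_num` later.

```shell
#!/usr/bin/env bash
# Rule-of-thumb total PG count for a pool:
# (OSDs * target PGs per OSD) / replica count, rounded up to a power of two.
pg_rule_of_thumb() {
  local osds="$1" size="$2" target="${3:-100}"
  local raw=$(( osds * target / size )) pg=1
  while (( pg < raw )); do
    pg=$(( pg * 2 ))
  done
  echo "$pg"
}

pg_rule_of_thumb 3 3   # 3 OSDs, 3 replicas: prints 128
```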

# Enable the rbd application on the pool

[root@node01/etc/ceph]$ ceph osd pool application enable rbd-demo rbd

# Initialize the pool

[root@node01/etc/ceph]$ rbd pool init -p rbd-demo

# Create an image

[root@node01/etc/ceph]$ rbd create rbd-demo/rbd-demo1.img --size 1G

# List images

[root@node01/etc/ceph]$ rbd ls -l -p rbd-demo

NAME SIZE PARENT FMT PROT LOCK

rbd-demo1.img 1 GiB 2

# Resize the image

[root@node01/etc/ceph]$ rbd resize -p rbd-demo --image rbd-demo1.img --size 2G

Resizing image: 100% complete...done.

[root@node01/etc/ceph]$ rbd ls -l -p rbd-demo

NAME SIZE PARENT FMT PROT LOCK

rbd-demo1.img 2 GiB 2

# Delete an image (for reference; not run here)

# rbd remove rbd-demo/rbd-demo1.img

# Configure a Linux client

# Create a client user with read-only access to the mons and full access to the rbd-demo pool

[root@admin/etc/ceph]$ ceph auth get-or-create client.osd-mount osd "allow * pool=rbd-demo" mon "allow r" > /etc/ceph/ceph.client.osd-mount.keyring

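# Disable image features the client kernel cannot use: by default the CentOS 7 kernel RBD client supports only the layering and striping features, so the others must be turned off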

[root@admin/etc/ceph]$ rbd feature disable rbd-demo/rbd-demo1.img object-map,fast-diff,deep-flatten

# Copy the user's keyring and ceph.conf to /etc/ceph on the client

[root@admin/etc/ceph]$ scp ceph.client.osd-mount.keyring ceph.conf root@client:/etc/ceph

# On the client (LNP)

[root@lnp~]$ yum install -y ceph-common

# Map the image on the client

[root@lnp/etc/ceph]$ cd /etc/ceph

[root@lnp/etc/ceph]$ rbd map rbd-demo/rbd-demo1.img --keyring /etc/ceph/ceph.client.osd-mount.keyring --user osd-mount

/dev/rbd0

# Show mapped images

[root@lnp/etc/ceph]$ rbd showmapped

id pool image snap device

0 rbd-demo rbd-demo1.img - /dev/rbd0

# Unmap the image (for reference; not run here)

# rbd unmap rbd-demo/rbd-demo1.img

# Format and mount

[root@lnp/etc/ceph]$ mkfs.xfs /dev/rbd0

[root@lnp/etc/ceph]$ mkdir /data/rbd01 -p

[root@lnp/etc/ceph]$ mount /dev/rbd0 /data/rbd01

[root@lnp/etc/ceph]$ df -h | grep /data/rbd01

/dev/rbd0 2.0G 33M 2.0G 2% /data/rbd01
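Like a plain `mount`, the RBD mapping disappears on reboot. `ceph-common` ships an `rbdmap` service that maps the images listed in `/etc/ceph/rbdmap` at boot and, per its manual page, mounts matching `/etc/fstab` entries. The sketch below only prints the two lines to add (function name hypothetical); it assumes the pool, image, user, keyring path, and mount point used above, and relies on the udev rule that creates `/dev/rbd/<pool>/<image>` links. Check `man rbdmap` for your release before relying on it, then enable the service with `systemctl enable rbdmap`.

```shell
#!/usr/bin/env bash
# Print the /etc/ceph/rbdmap line and the /etc/fstab line for one RBD image.
# Arguments: pool, image, client id (without "client."), mount point.
rbd_persist_lines() {
  local pool="$1" image="$2" user="$3" mnt="$4"
  local keyring="/etc/ceph/ceph.client.${user}.keyring"
  # /etc/ceph/rbdmap format: <pool>/<image> <map options>
  printf '%s/%s id=%s,keyring=%s\n' "$pool" "$image" "$user" "$keyring"
  # /etc/fstab: noauto keeps the normal boot-time mount from running before the map
  printf '/dev/rbd/%s/%s %s xfs noauto,_netdev 0 0\n' "$pool" "$image" "$mnt"
}

rbd_persist_lines rbd-demo rbd-demo1.img osd-mount /data/rbd01
```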

# Online expansion

# Resize the image on the admin node

[root@admin/etc/ceph]$ rbd resize rbd-demo/rbd-demo1.img --size 5G

Resizing image: 100% complete...done.

# On the client, grow the XFS filesystem and check again

[root@lnp/etc/ceph]$ xfs_growfs /dev/rbd0

[root@lnp/etc/ceph]$ df -h | grep /data/rbd01

/dev/rbd0 5.0G 33M 5.0G 1% /data/rbd01

LNP

# Dependencies for building from source

yum -y install make cmake gcc gcc-c++ flex bison file libtool libtool-libs autoconf kernel-devel libjpeg libjpeg-devel libpng libpng-devel gd freetype freetype-devel libxml2 libxml2-devel zlib zlib-devel glib2 glib2-devel bzip2 bzip2-devel libevent ncurses ncurses-devel curl curl-devel e2fsprogs e2fsprogs-devel krb5-devel libidn libidn-devel openssl openssl-devel gettext gettext-devel ncurses-devel gmp-devel unzip libcap lsof epel-release autoconf automake pcre-devel libxml2-devel

# Download PCRE: https://ftp.pcre.org/pub/pcre/

[root@lnp/usr/local/src]$ ls

nginx-1.24.0.tar.gz pcre-8.41.tar.gz php-7.1.10.tar.gz

[root@lnp/usr/local/src]$ tar xf pcre-8.41.tar.gz

[root@lnp/usr/local/src]$ useradd -M -s /sbin/nologin nginx

[root@lnp/usr/local/src]$ tar zxvf nginx-1.24.0.tar.gz -C /usr/local/src/

[root@lnp/usr/local/src]$ cd nginx-1.24.0/

[root@lnp/usr/local/src/nginx-1.24.0]$ ./configure --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module --with-http_v2_module --with-http_gunzip_module --with-http_sub_module --with-http_flv_module --with-http_mp4_module --with-pcre=/usr/local/src/pcre-8.41 --user=nginx --group=nginx

[root@lnp/usr/local/src/nginx-1.24.0]$ make && make install

[root@lnp/usr/local/src/nginx-1.24.0]$ ln -s /usr/local/nginx/sbin/nginx /usr/sbin/nginx

[root@lnp/usr/local/src/nginx-1.24.0]$ nginx -v

nginx version: nginx/1.24.0

# Install PHP

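# Install PHP either with yum (next command) or by compiling from source (the steps after it); use one method, not both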

[root@lnp/usr/local/src/php-7.1.10]$ yum install php php-mysql php-gd php-mbstring -y

[root@lnp/usr/local/src]$ tar xf php-7.1.10.tar.gz

[root@lnp/usr/local/src]$ ls

nginx-1.24.0 pcre-8.41 php-7.1.10

nginx-1.24.0.tar.gz pcre-8.41.tar.gz php-7.1.10.tar.gz

[root@lnp/usr/local/src]$ cd php-7.1.10/

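# The --with-mysqli and --with-pdo-mysql paths assume MySQL files exist under /usr/local/src/mysql on this host; since the database runs on 10.0.0.8, --with-mysqli=mysqlnd --with-pdo-mysql=mysqlnd (PHP's bundled native driver) is an alternative that needs no local MySQL installation

[root@lnp/usr/local/src/php-7.1.10]$ ./configure --prefix=/usr/local/php/ --with-config-file-path=/usr/local/php/etc/ --with-mysqli=/usr/local/src/mysql/bin/mysql_config --enable-soap --enable-mbstring=all --enable-sockets --with-pdo-mysql=/usr/local/src/mysql --with-gd --without-pear --enable-fpm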

[root@lnp/usr/local/src/php-7.1.10]$ make && make install

# 生成配置文件

[root@lnp/usr/local/src/php-7.1.10]$ cp php.ini-production /usr/local/php/etc/php.ini

[root@lnp/usr/local/php/bin]$ ln -s /usr/local/php/bin/* /usr/local/bin/

[root@lnp/usr/local/php/bin]$ ln -s /usr/local/php/sbin/* /usr/local/sbin/

# Connect Nginx to PHP (via PHP-FPM)

[root@lnp/usr/local/php/bin]$ cd /usr/local/php/etc/

[root@lnp/usr/local/php/etc]$ cp -a php-fpm.conf.default php-fpm.conf

# Edit the php-fpm configuration file

[root@lnp/usr/local/php/etc]$ vim php-fpm.conf

pid = run/php-fpm.pid

[root@lnp/usr/local/php/etc]$ cd /usr/local/php/etc/php-fpm.d/

[root@lnp/usr/local/php/etc/php-fpm.d]$ cp www.conf.default www.conf

# Run the PHP-FPM workers as the nginx user and group

[root@lnp/usr/local/php/etc/php-fpm.d]$ vim www.conf

user = nginx

group = nginx

# Create a service script for Nginx and PHP-FPM

[root@lnp/usr/local/php/etc/php-fpm.d]$ vim /etc/init.d/nginx_php

#!/bin/bash

ngxc="/usr/local/nginx/sbin/nginx"

pidf="/usr/local/nginx/logs/nginx.pid"

ngxc_fpm="/usr/local/php/sbin/php-fpm"

pidf_fpm="/usr/local/php/var/run/php-fpm.pid"

case "$1" in

start)

$ngxc -t &> /dev/null

if [ $? -eq 0 ];then

$ngxc

$ngxc_fpm

echo "nginx service start success!"

else

$ngxc -t

fi

;;

stop)

kill -s QUIT $(cat $pidf)

kill -s QUIT $(cat $pidf_fpm)

echo "nginx service stop success!"

;;

restart)

$0 stop

$0 start

;;

reload)

$ngxc -t &> /dev/null

if [ $? -eq 0 ];then

kill -s HUP $(cat $pidf)

kill -s USR2 $(cat $pidf_fpm) # php-fpm performs a graceful reload on USR2

echo "reload nginx config success!"

else

$ngxc -t

fi

;;

*)

echo "please input stop|start|restart|reload."

exit 1

esac

# Make the script executable

chmod a+x /etc/init.d/nginx_php
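The script has no `status` action. A minimal sketch of a helper (hypothetical name `pid_running`) that such an action could call with `$pidf` and `$pidf_fpm`: a service counts as running when its PID file is readable and `kill -0` succeeds, which sends no signal and only checks that the process exists. `kill -0` also fails for another user's process when the caller lacks permission, so run it as root like the rest of the script.

```shell
#!/usr/bin/env bash
# Return 0 if the PID stored in the given file belongs to a live process.
pid_running() {
  local pidfile="$1" pid
  [ -r "$pidfile" ] || return 1
  pid="$(cat "$pidfile")"
  [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}

# Possible status branch for the case statement (sketch):
#   status)
#     pid_running "$pidf" && echo "nginx is running" || echo "nginx is stopped"
#     pid_running "$pidf_fpm" && echo "php-fpm is running" || echo "php-fpm is stopped"
#     ;;
```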

# Edit the **Nginx** configuration so that files with the **.php** extension are passed to PHP-FPM

vim /usr/local/nginx/conf/nginx.conf

location ~ \.php$ {

root html;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi.conf;

}

# Add a PHP test page

[root@lnmp html]# cat index.php

<?php

phpinfo();

?>

# Start Nginx and PHP-FPM

/etc/init.d/nginx_php start

# Check the listening ports

[root@lnp/usr/local/php/etc/php-fpm.d]$ netstat -antup | grep 9000 && netstat -antup | grep 80

tcp 0 0 127.0.0.1:9000 0.0.0.0:* LISTEN 7694/php-fpm: maste

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 7691/nginx: master

# Deploy the Discuz! forum

[root@lnp/usr/local/nginx/conf]$ vim nginx.conf

location / {

root html;

index index.php index.htm;

}

[root@lnp/data/rbd01]$ if [ -f /usr/bin/curl ];then curl -sSLO https://www.discuz.vip/install/X3.4.sh;else wget -O X3.4.sh https://www.discuz.vip/install/X3.4.sh;fi;bash X3.4.sh

--------------------

Discuz! X installation file download

--------------------

Before downloading Discuz! X, make sure your domain name is resolved and bound. Enter the directory bound to the domain: .

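# Note: if /usr/local/nginx/html still exists as a directory (it does after the default install), this command creates the link html/rbd01 inside it; to make html itself point at /data/rbd01, move the original directory aside first

[root@lnp/usr/local/nginx]$ ln -s /data/rbd01 /usr/local/nginx/html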

# Restart the services

[root@lnp/usr/local/nginx/html]$ /etc/init.d/nginx_php restart

nginx service stop success!

nginx service start success!

http://10.0.0.20/install/

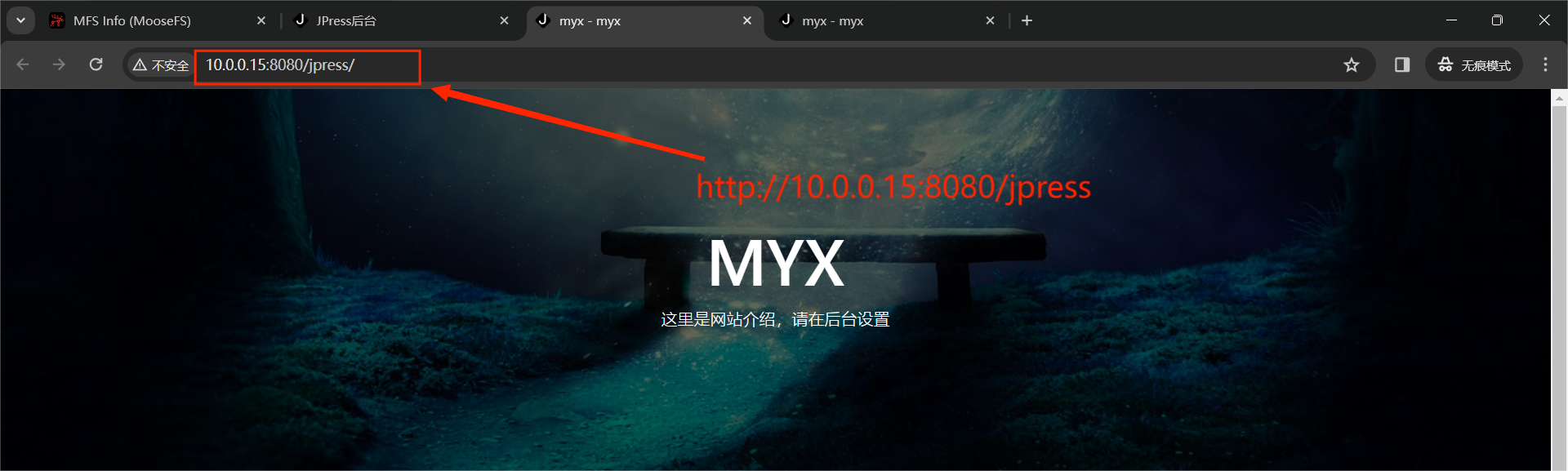

Nginx Reverse Proxy

[root@nginx-1/apps/nginx/conf]$ vim nginx.conf

server {

listen 80;

server_name www.jpress.com;

location / {

proxy_pass http://10.0.0.15:8080;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}
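This `proxy_pass` sends all JPress traffic to tomcat-2 (10.0.0.15) only, although tomcat-1 serves the same JPress files from MFS. One option is an `upstream` group containing both Tomcat servers, placed in the `http` block and referenced with `proxy_pass http://jpress_backend;`. The helper below is a sketch that renders such a block; the group name `jpress_backend` is a placeholder and Nginx's default round-robin balancing is assumed. If logins do not persist across requests, adding `ip_hash;` inside the block pins each client to one backend.

```shell
#!/usr/bin/env bash
# Render an nginx upstream block. Arguments: group name, then one or more backends.
render_upstream() {
  local name="$1" backend
  shift
  printf 'upstream %s {\n' "$name"
  for backend in "$@"; do
    printf '    server %s;\n' "$backend"
  done
  printf '}\n'
}

render_upstream jpress_backend 10.0.0.14:8080 10.0.0.15:8080
```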

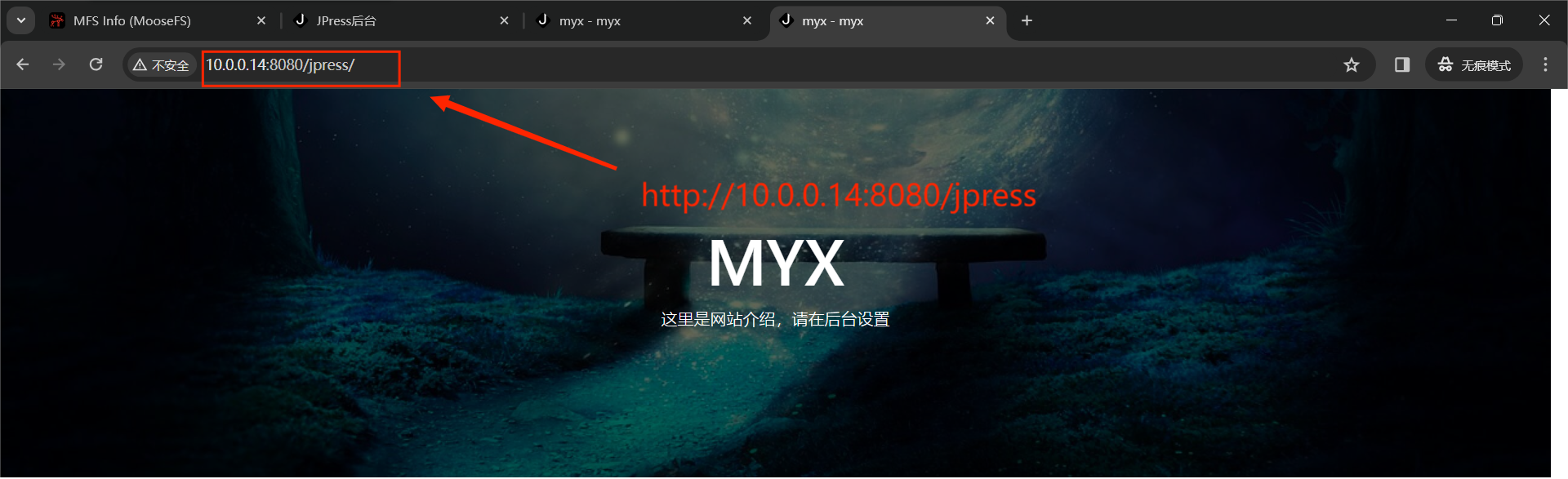

[root@nginx-2/apps/nginx/conf]$ vim nginx.conf

server {

listen 80;

server_name www.discuz.com;

location / {

proxy_pass http://10.0.0.20;

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

# Add hosts entries on the local machine used for browsing

10.0.0.21 www.jpress.com

10.0.0.22 www.discuz.com

iptables Firewall Policy

# Enable IP forwarding

[root@iptables~]$ vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

# Apply and verify

[root@iptables~]$ sysctl -p

net.ipv4.ip_forward = 1

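# Note: INPUT/OUTPUT rules filter traffic to and from the firewall host itself; traffic routed through it is matched by the FORWARD chain. DNS (port 53) also uses UDP, which these TCP-only rules do not cover

[root@iptables~]$ iptables -A INPUT -i ens32 -p tcp -m multiport --dport 22,53,80,443 -j ACCEPT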

[root@iptables~]$ iptables -A OUTPUT -o ens32 -p tcp -m multiport --sport 22,53,80,443 -j ACCEPT

[root@iptables~]$ iptables -A INPUT -i ens32 -p tcp -j DROP
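The requirement is to control traffic that is forwarded through the firewall (with `ip_forward` enabled above), which iptables matches in the `FORWARD` chain. A sketch, assuming clients reach the backends by routing through 10.0.0.9, that prints candidate `FORWARD` rules for review before they are piped to `sh` (function name hypothetical; interface matches such as `-i ens32` are left out):

```shell
#!/usr/bin/env bash
# Print iptables commands that allow only the given TCP ports (plus DNS over UDP)
# through the FORWARD chain and drop all other routed traffic.
forward_rules() {
  local ports="${1:-22,53,80,443}"
  echo "iptables -P FORWARD DROP"
  # Let replies to permitted connections flow back
  echo "iptables -A FORWARD -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT"
  echo "iptables -A FORWARD -p tcp -m multiport --dports $ports -j ACCEPT"
  echo "iptables -A FORWARD -p udp --dport 53 -j ACCEPT"
}

forward_rules   # review the output, then apply with: forward_rules | sh
```

Rules added this way are lost on reboot; on CentOS 7 with the `iptables-services` package installed, `service iptables save` stores the current rule set.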


This is an original article licensed under CC BY-NC-ND 4.0. When reposting it in full, please credit 梦缘羲.
